Server-Sent Events (SSE) is a server push technology that allows a client to receive automatic updates from a server via an HTTP connection. It provides a simple way to send event stream data from the server to the client.

When using SSE, the client sends an ordinary HTTP request and receives a response with the header Content-Type: text/event-stream and no Content-Length header. The client then expects messages in the following format:

    event: userconnect
    data: {"username": "bobby", "time": "02:33:48"}

    event: usermessage
    data: {"username": "bobby", "time": "02:34:11", "text": "Hi everyone."}

Each pair of event (optional) and data (required) is sent to the client from the server one by one. These pairs may not arrive at the same time. It is the client's responsibility to handle each pair and update the UI accordingly.
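On the server side, producing this format could look roughly like the following. This is a minimal sketch in Go, assuming single-line data payloads; `writeEvent`, `eventsHandler`, `streamOnce` and the `/events` path are hypothetical names used only for illustration, not part of ChatGPT or ChatGPT-Next-Web.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// writeEvent writes one SSE message (optional event name, required data)
// and flushes it so the client can see it right away.
// Assumption: data contains no newlines.
func writeEvent(w http.ResponseWriter, event, data string) error {
	if event != "" {
		if _, err := fmt.Fprintf(w, "event: %s\n", event); err != nil {
			return err
		}
	}
	// The blank line after "data:" terminates the message.
	if _, err := fmt.Fprintf(w, "data: %s\n\n", data); err != nil {
		return err
	}
	// Flushing is only possible if the writer exposes http.Flusher.
	if f, ok := w.(http.Flusher); ok {
		f.Flush()
	}
	return nil
}

// eventsHandler is a hypothetical endpoint that streams the two sample events.
func eventsHandler(w http.ResponseWriter, r *http.Request) {
	w.Header().Set("Content-Type", "text/event-stream")
	w.Header().Set("Cache-Control", "no-cache")
	if err := writeEvent(w, "userconnect", `{"username": "bobby", "time": "02:33:48"}`); err != nil {
		return
	}
	writeEvent(w, "usermessage", `{"username": "bobby", "time": "02:34:11", "text": "Hi everyone."}`)
}

// streamOnce runs the handler against an in-memory recorder and reports
// the body that was written and whether a flush happened.
func streamOnce() (body string, flushed bool) {
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/events", nil)
	eventsHandler(rec, req)
	return rec.Body.String(), rec.Flushed
}

func main() {
	body, flushed := streamOnce()
	fmt.Print(body)
	fmt.Println("flushed:", flushed)
}
```

Note the type assertion to `http.Flusher`: if the writer handed to the handler does not expose it, each message is still written but may sit in a buffer instead of reaching the client immediately.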

ChatGPT provides an HTTP API that supports SSE.

ChatGPT-Next-Web is a web application that wraps the ChatGPT API and retains the SSE feature. It provides an easy-to-use UI.

The following animation demonstrates how SSE works in a web application and how event stream data is sent to the client:

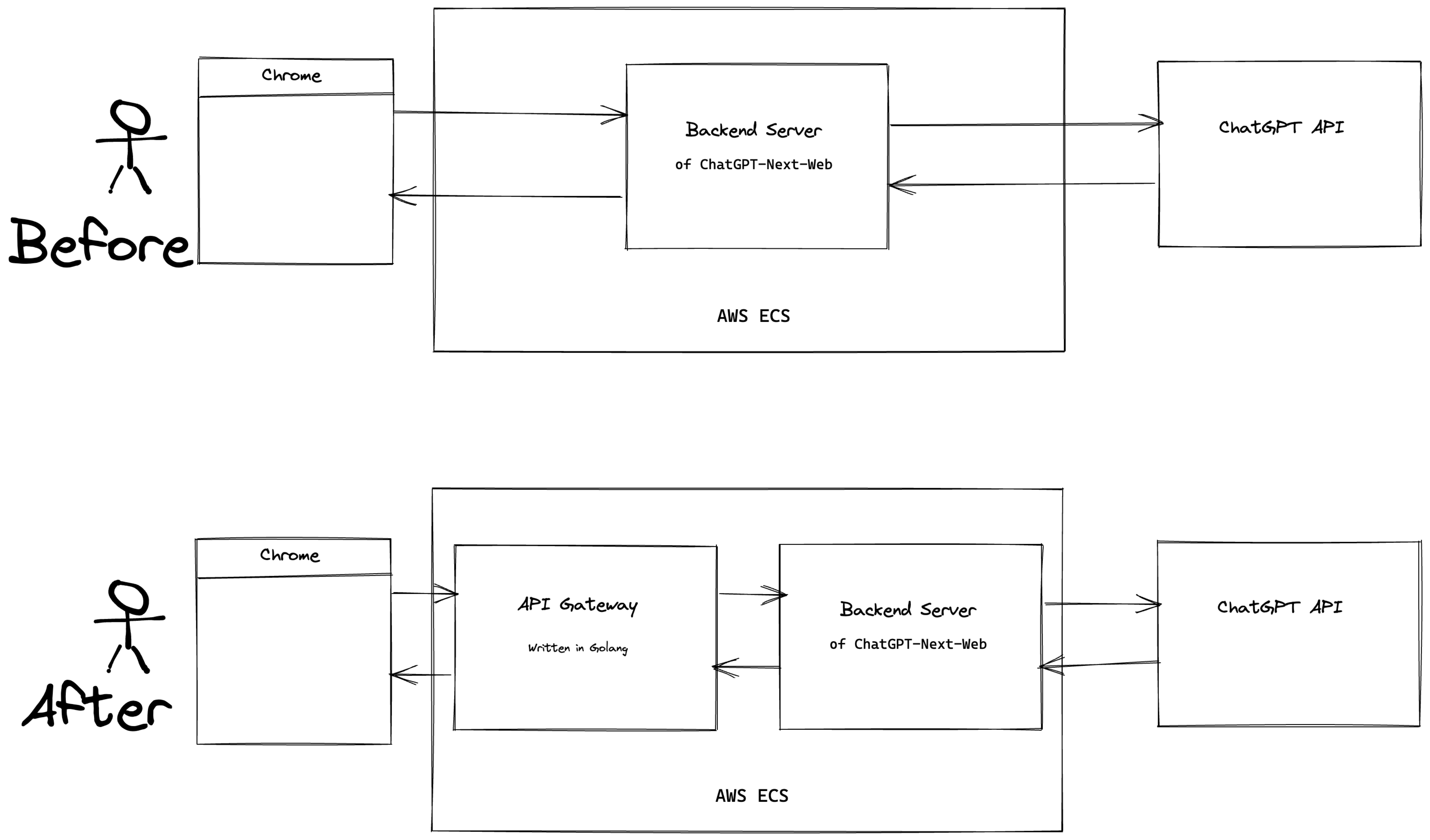

SSE worked well until I introduced an API gateway in Go between the frontend and backend servers of ChatGPT-Next-Web, as shown in the diagram below:

After adding the API gateway, SSE became laggy:

I suspected that the API gateway was causing the issue and interfering with the data flow.

The main purpose of this API gateway is to apply various middlewares to HTTP requests and responses passing through the gateway. These middlewares are used for tasks such as authentication, instrumentation, and routing of HTTP requests.

A middleware is a simple function that reads and modifies HTTP requests and responses. Here's an example of a middleware function:

    func HelloHandler(w http.ResponseWriter, r *http.Request) {
    	w.Write([]byte("Hello, World!"))
    }
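The function above only writes a response. A middleware in the usual net/http sense wraps another handler and runs code before or after it. The sketch below assumes the standard `func(http.Handler) http.Handler` shape rather than the gateway's own `Middleware` type; `withGatewayHeader`, `callWrapped` and the `X-Gateway` header are hypothetical names.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// HelloHandler is the plain handler from the text.
func HelloHandler(w http.ResponseWriter, r *http.Request) {
	w.Write([]byte("Hello, World!"))
}

// withGatewayHeader is a hypothetical middleware: it modifies the response
// headers, then delegates to the wrapped handler.
func withGatewayHeader(next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("X-Gateway", "demo")
		next.ServeHTTP(w, r)
	})
}

// callWrapped sends one in-memory request through the middleware chain.
func callWrapped() (header, body string) {
	rec := httptest.NewRecorder()
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	withGatewayHeader(http.HandlerFunc(HelloHandler)).ServeHTTP(rec, req)
	return rec.Header().Get("X-Gateway"), rec.Body.String()
}

func main() {
	header, body := callWrapped()
	fmt.Println(header, body)
}
```

The important detail for what follows is that a middleware decides which `http.ResponseWriter` value the next handler receives, so it can also pass along a wrapper instead of the original writer.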

To investigate the issue, I first checked the TCP_NODELAY option and confirmed that it was enabled by default.

Next, I removed all the middlewares and added them back one by one, observing the impact on SSE. This showed that the GeneralMetrics middleware was causing the SSE lag.

Here is the code for the GeneralMetrics middleware:

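    package main

    import (
    	"context"
    	"net/http"
    	"strconv"

    	"github.com/prometheus/client_golang/prometheus"
    )

    // Middleware and pkg.ContextualHandleFunc are defined in the gateway's own
    // packages; their imports are omitted here.

    var (
    	requestCounter = prometheus.NewCounterVec(
    		prometheus.CounterOpts{Name: "http_requests_total"},
    		[]string{"service", "host", "path", "status"},
    	)
    )

    func GeneralMetrics(serviceName string) Middleware {
    	// Register returns an error if the collector is already registered; it is ignored here.
    	prometheus.Register(requestCounter)
    	return func(ctx context.Context, rw http.ResponseWriter, req *http.Request, next pkg.ContextualHandleFunc) {
    		// Wrap the writer so the status code can be recorded.
    		mrw := MetricsResponseWriter{rw, http.StatusOK}
    		defer func() {
    			requestCounter.WithLabelValues(serviceName, req.Host, req.URL.Path, strconv.Itoa(mrw.statusCode)).Inc()
    		}()
    		next(ctx, &mrw, req)
    	}
    }

    // MetricsResponseWriter embeds only the http.ResponseWriter interface.
    type MetricsResponseWriter struct {
    	http.ResponseWriter
    	statusCode int
    }

    func (mrw *MetricsResponseWriter) WriteHeader(statusCode int) {
    	mrw.ResponseWriter.WriteHeader(statusCode)
    	mrw.statusCode = statusCode
    }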

The issue lies in the MetricsResponseWriter struct.

The MetricsResponseWriter wraps rw, an http.ResponseWriter, and passes the wrapper to the next middleware. However, it only embeds the http.ResponseWriter interface, so only that interface's methods are visible on the wrapper, while the concrete value behind rw is an http.response struct that implements multiple interfaces.

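In this API gateway, SSE lags because the MetricsResponseWriter does not implement the http.Flusher interface, which the ReverseProxy used in the gateway relies on to push each chunk of a streaming response to the client as soon as it arrives. Without it, events wait in a buffer and reach the client in bursts.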
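The effect is easy to reproduce in isolation. The sketch below uses `httptest.ResponseRecorder` (which implements `http.Flusher`) as a stand-in for the real response; `plainWriter`, `canFlush` and `checkFlusher` are hypothetical names, with `plainWriter` mirroring the shape of MetricsResponseWriter.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// plainWriter embeds only the http.ResponseWriter interface,
// like MetricsResponseWriter in the text.
type plainWriter struct {
	http.ResponseWriter
	statusCode int
}

// canFlush reports whether a caller can see http.Flusher on w.
func canFlush(w http.ResponseWriter) bool {
	_, ok := w.(http.Flusher)
	return ok
}

// checkFlusher compares the bare recorder with the same recorder wrapped.
func checkFlusher() (direct, wrapped bool) {
	rec := httptest.NewRecorder() // implements http.Flusher
	return canFlush(rec), canFlush(&plainWriter{rec, http.StatusOK})
}

func main() {
	direct, wrapped := checkFlusher()
	fmt.Println("recorder:", direct, "wrapper:", wrapped)
}
```

Embedding an interface promotes only that interface's methods, so the type assertion on the wrapper fails even though the wrapped value could flush.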

To resolve this issue, it is not necessary to remove the Prometheus metrics code. Since the value wrapped by MetricsResponseWriter is an http.response, which already implements the http.Flusher interface, we can simply declare the http.Flusher interface for the MetricsResponseWriter:

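    package main

    import (
    	"context"
    	"net/http"
    	"strconv"

    	"github.com/prometheus/client_golang/prometheus"
    )

    // Middleware and pkg.ContextualHandleFunc are defined in the gateway's own
    // packages; their imports are omitted here.

    var (
    	requestCounter = prometheus.NewCounterVec(
    		prometheus.CounterOpts{Name: "http_requests_total"},
    		[]string{"service", "host", "path", "status"},
    	)
    )

    func GeneralMetrics(serviceName string) Middleware {
    	// Register returns an error if the collector is already registered; it is ignored here.
    	prometheus.Register(requestCounter)
    	return func(ctx context.Context, rw http.ResponseWriter, req *http.Request, next pkg.ContextualHandleFunc) {
    		// This single-value type assertion panics if rw does not also implement http.Flusher.
    		mrw := MetricsResponseWriter{rw.(metricsResponse), http.StatusOK}
    		defer func() {
    			requestCounter.WithLabelValues(serviceName, req.Host, req.URL.Path, strconv.Itoa(mrw.statusCode)).Inc()
    		}()
    		next(ctx, &mrw, req)
    	}
    }

    // MetricsResponseWriter now embeds metricsResponse, so Flush is promoted too.
    type MetricsResponseWriter struct {
    	metricsResponse
    	statusCode int
    }

    func (mrw *MetricsResponseWriter) WriteHeader(statusCode int) {
    	mrw.metricsResponse.WriteHeader(statusCode)
    	mrw.statusCode = statusCode
    }

    // metricsResponse lists every interface the wrapper must expose.
    type metricsResponse interface {
    	http.ResponseWriter
    	http.Flusher
    }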

However, this is not the end of the problem. If someone wants to write an HTTPS proxy, they will need to declare the http.Hijacker interface for the MetricsResponseWriter. In that case, the metricsResponse interface should be expanded:

    type metricsResponse interface {
    	http.ResponseWriter
    	http.Flusher
    	http.Hijacker
    }

Since the http.response struct in Go 1.20.2 implements 15 interfaces, it is tempting, but a terrible approach, to declare all 10 public interfaces for the MetricsResponseWriter in order to head off any future problems:

    type metricsResponse interface {
    	http.ResponseWriter
    	http.Flusher
    	http.Hijacker
    	// Declare other interfaces here
    }
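One possible alternative to listing interfaces, available since Go 1.20, is to give the wrapper an `Unwrap` method so that `http.ResponseController` can reach the underlying writer. The sketch below is an illustration under that assumption, not the gateway's actual code; `statusWriter` and `flushThroughWrapper` are hypothetical names, and `httptest.ResponseRecorder` stands in for the real response.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// statusWriter is a hypothetical variant of MetricsResponseWriter that
// embeds only http.ResponseWriter but exposes the wrapped writer via Unwrap.
type statusWriter struct {
	http.ResponseWriter
	statusCode int
}

func (w *statusWriter) WriteHeader(code int) {
	w.statusCode = code
	w.ResponseWriter.WriteHeader(code)
}

// Unwrap lets http.ResponseController find the original writer.
func (w *statusWriter) Unwrap() http.ResponseWriter {
	return w.ResponseWriter
}

// flushThroughWrapper flushes via a ResponseController built on the wrapper
// and reports whether the underlying recorder was actually flushed.
func flushThroughWrapper() (flushed bool, err error) {
	rec := httptest.NewRecorder()
	sw := &statusWriter{ResponseWriter: rec, statusCode: http.StatusOK}
	err = http.NewResponseController(sw).Flush()
	return rec.Flushed, err
}

func main() {
	flushed, err := flushThroughWrapper()
	fmt.Println(flushed, err)
}
```

This only helps callers that go through `http.ResponseController`. Code that type-asserts `http.Flusher` or `http.Hijacker` directly on the wrapper will still not see those interfaces, so whether this is enough depends on how the downstream code flushes.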